TL;DR

The development of ChatGPT is a testament to the remarkable progress made in natural language processing (NLP), a subfield of artificial intelligence. Through a series of groundbreaking innovations, NLP models have evolved from simple word counting to sophisticated systems that can understand and generate human-like language. ChatGPT is the culmination of these advancements, leveraging techniques like word embeddings, transformers, and generative pre-training to engage in coherent conversations, generate text, and assist users with a wide range of language-based tasks. As NLP continues to advance, we can expect even more remarkable developments in human-machine communication.

Where did it all begin?

Attention, AI enthusiasts! Enter the fascinating realm of Natural Language Processing (NLP) as we embark on a journey to unravel the secrets behind the incredible intelligence of ChatGPT. Tracing its roots through a rich lineage of technological advancements, prepare to discover how ChatGPT evolved from humble beginnings to its groundbreaking status as a conversational phenomenon. Dive into the world of Bag of Words, Word Embeddings, LSTMs, Transformers, and BERT, as they paved the way for the Generative Pre-Training (GPT) models that ultimately culminated in ChatGPT’s remarkable capabilities. Here, we’ll peel back the layers of innovation, revealing the intricate tapestry of breakthroughs that led to this AI marvel.

Natural Language Processing has been an active field of development since the 1990s, but as far back as 1954 Zellig Harris used the phrase “bag of words” in his seminal paper, “Distributional Structure.”

Bag of Words

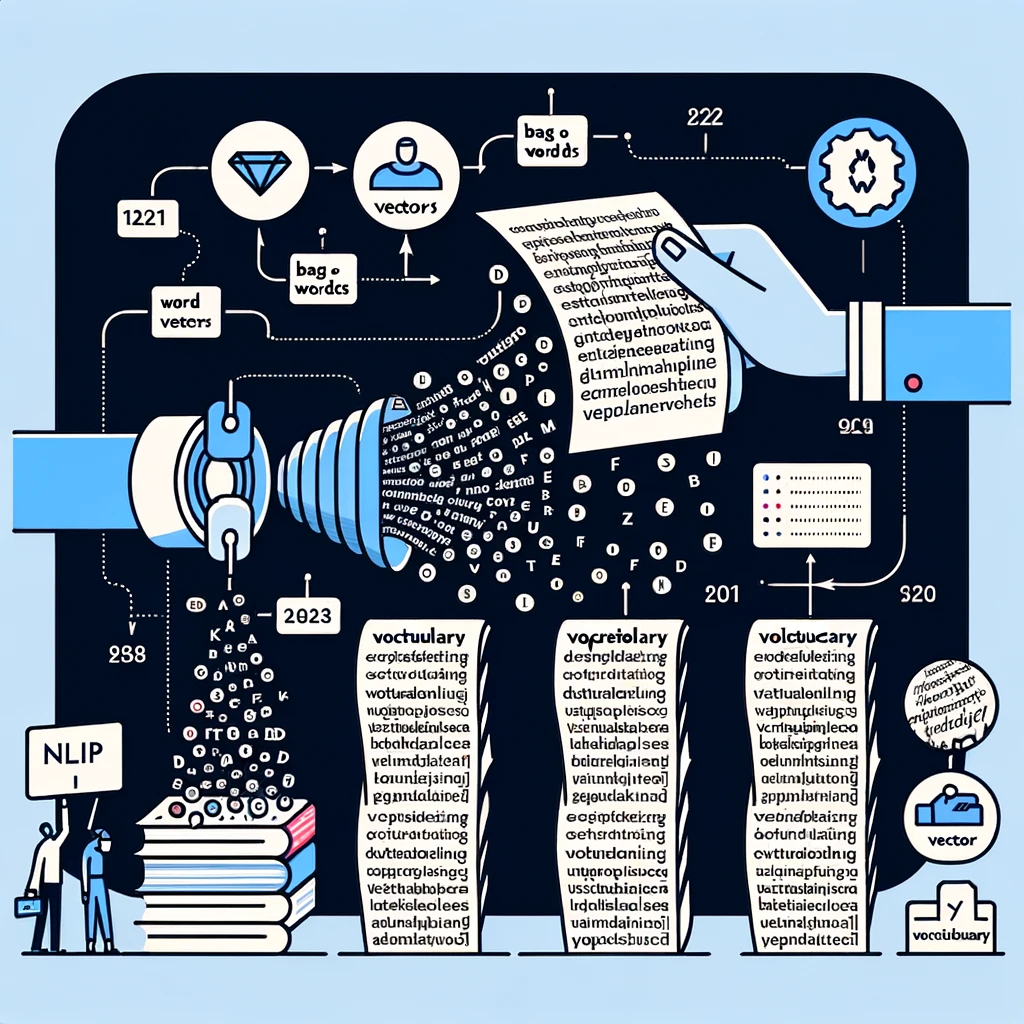

The Bag of Words (BoW) model is a fundamental approach in Natural Language Processing (NLP) used to convert text documents into numerical vectors or “bags” of words. This model disregards the order of words and focuses solely on the frequency of each word’s occurrence within a document. Here’s how it works: First, a vocabulary of unique words from the entire set of documents (corpus) is created. Then, each document is represented as a vector, where each dimension corresponds to a word in the vocabulary. The value in each dimension is the count of how often that word appears in the document. For example, if our vocabulary is [“the”, “quick”, “brown”, “fox”], and our document is “the quick fox”, the BoW representation would be [1, 1, 0, 1], indicating “the” appears once, “quick” once, “brown” not at all, and “fox” once. This model is widely used in tasks like document classification and sentiment analysis because it transforms text into a numerical form that machine learning algorithms can process.
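To make the counting step concrete, here is a minimal sketch in plain Python that reproduces the example above. The helper names `build_vocabulary` and `bag_of_words` are made up for illustration, and the tokenizer is just a lowercase whitespace split, which is an assumption; real pipelines handle punctuation and much larger vocabularies.

```python
from collections import Counter


def build_vocabulary(corpus):
    """Collect the unique lowercase words of a corpus, in first-seen order."""
    vocabulary = []
    seen = set()
    for document in corpus:
        for word in document.lower().split():
            if word not in seen:
                seen.add(word)
                vocabulary.append(word)
    return vocabulary


def bag_of_words(document, vocabulary):
    """Return one count per vocabulary word; word order in the document is ignored."""
    counts = Counter(document.lower().split())
    return [counts[word] for word in vocabulary]


vocabulary = ["the", "quick", "brown", "fox"]
print(bag_of_words("the quick fox", vocabulary))  # [1, 1, 0, 1]
```

Because only counts are kept, “the quick fox” and “fox quick the” produce exactly the same vector, which is the sense in which the model disregards word order.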

The scikit-learn library offers an easy way to get started with a bag-of-words model in Python.

Word Embeddings

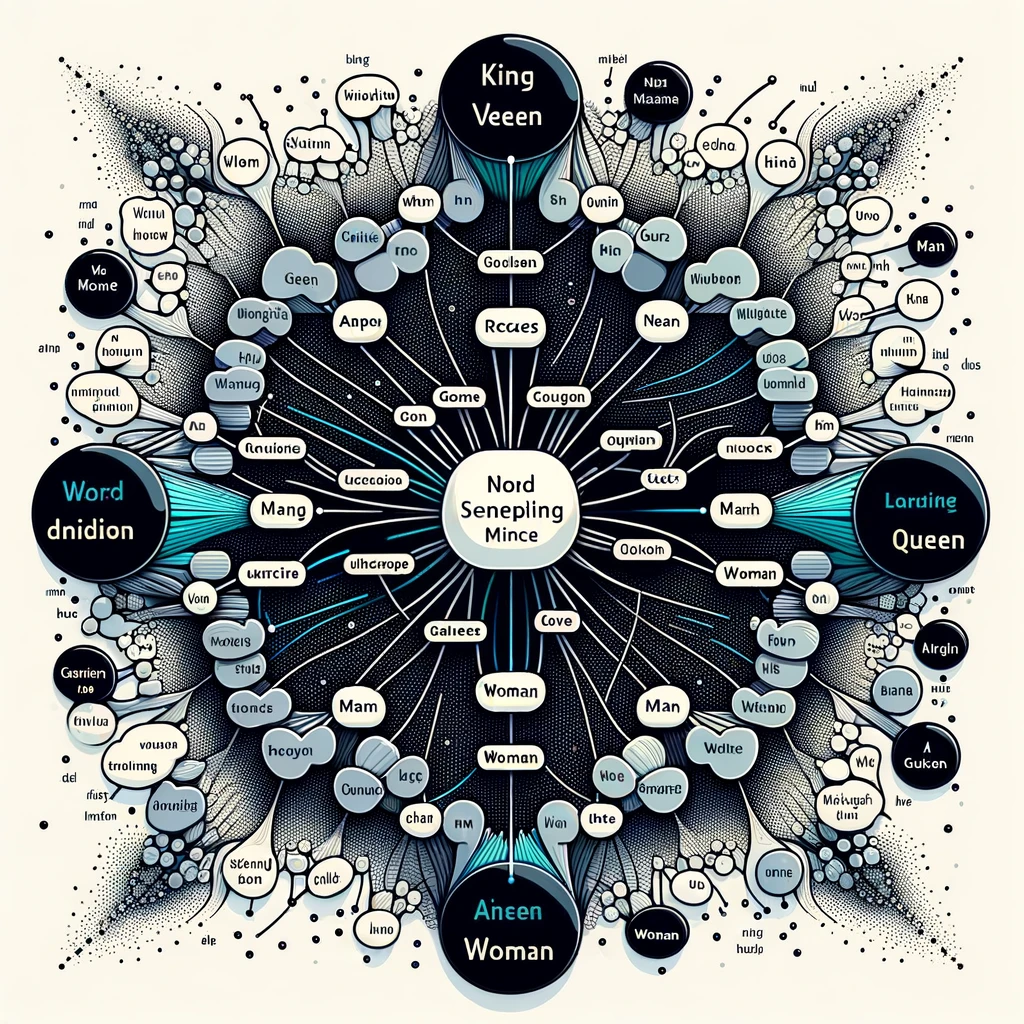

Word2Vec and GloVe (Global Vectors for Word Representation) are two prominent models used for obtaining vector representations of words, known as word embeddings. These models map each word to a dense vector, typically a few hundred dimensions, in a space where semantically similar words are positioned closer to each other. Word2Vec, developed by a team led by Tomas Mikolov at Google, offers two architectures: Continuous Bag of Words (CBOW) and Skip-Gram, which approach the same prediction problem from opposite directions. CBOW predicts a target word from its surrounding context, whereas Skip-Gram predicts the surrounding context from a given word. GloVe, developed by researchers at Stanford, combines the advantages of two main model families in word representation: global matrix factorization and local context window methods. It does so by constructing a co-occurrence matrix that counts how often words appear together in a given corpus, and then fitting word vectors whose dot products approximate the logarithm of those counts. The result is a set of vectors where each word’s position in the vector space captures its semantic meaning based on its co-occurrence with other words.
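The difference between CBOW and Skip-Gram, and the co-occurrence counts GloVe starts from, are easier to see on a tiny sentence. The sketch below only builds the training examples and the raw counts; it does not train any embeddings. The function names and the window size of 1 are illustrative assumptions, and the unweighted counting is a simplification of what real implementations do.

```python
from collections import Counter


def context_windows(tokens, window=1):
    """Yield (center word, neighbours within `window` positions) for each token."""
    for i, center in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        yield center, left + right


def skipgram_pairs(tokens, window=1):
    """Skip-Gram examples: (input word, one context word to predict)."""
    return [
        (center, neighbour)
        for center, context in context_windows(tokens, window)
        for neighbour in context
    ]


def cbow_pairs(tokens, window=1):
    """CBOW examples: (all context words, target word to predict)."""
    return [
        (tuple(context), center)
        for center, context in context_windows(tokens, window)
    ]


def cooccurrence_counts(tokens, window=1):
    """Count how often each ordered word pair appears within the window."""
    return Counter(skipgram_pairs(tokens, window))


tokens = "the quick brown fox".split()
print(skipgram_pairs(tokens))
print(cbow_pairs(tokens))
print(cooccurrence_counts(tokens))
```

Skip-Gram turns every (word, neighbour) combination into its own example, while CBOW groups all neighbours into a single example per word. The counter at the end is a sparse form of the co-occurrence matrix described above.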

Stanford’s GloVe project is still available on their website, where you can read the original paper and see examples.

LSTMs (1997)

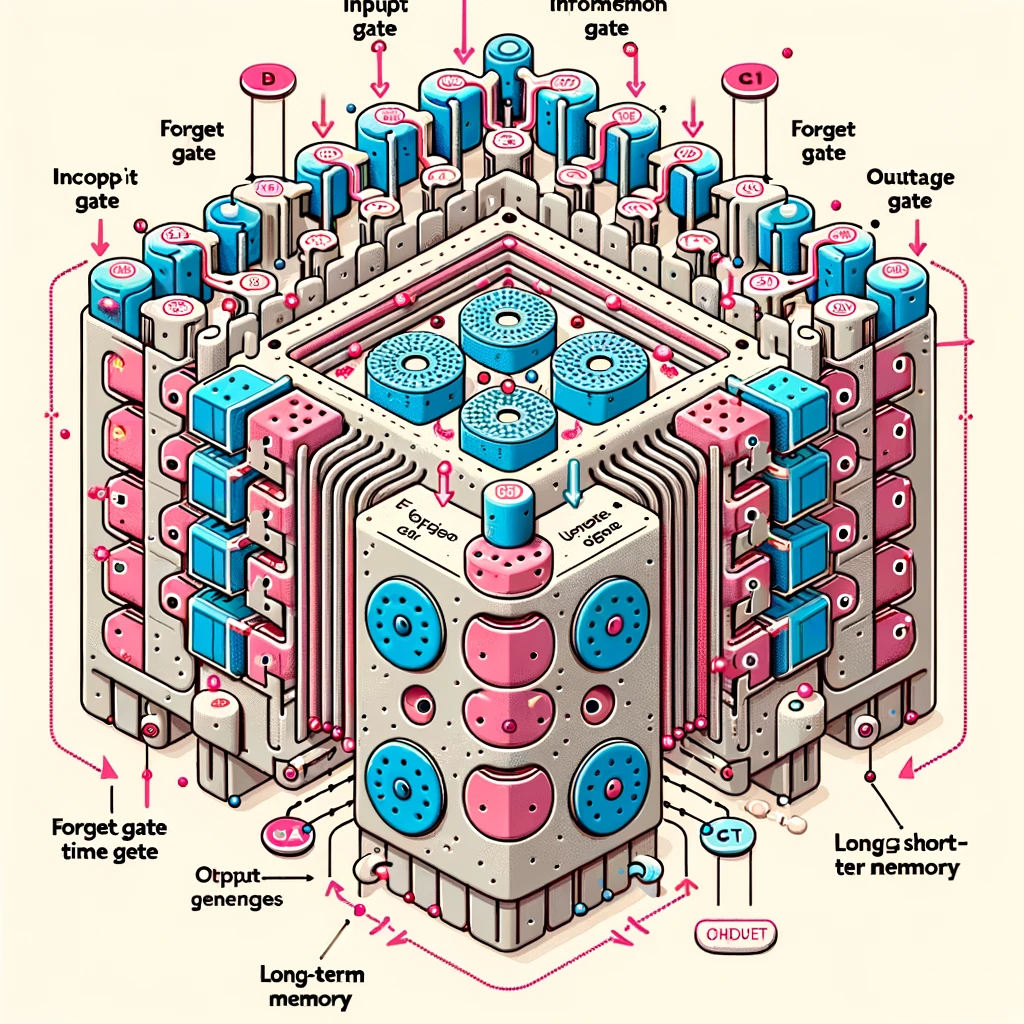

Long Short-Term Memory (LSTM) networks are a special kind of Recurrent Neural Network (RNN) capable of learning long-term dependencies. Traditional RNNs struggle with the vanishing and exploding gradient problems, especially with sequences of significant length, which hinders their ability to remember information from early inputs as the sequence progresses. LSTMs address this issue with a unique architecture that includes three gates: an input gate, an output gate, and a forget gate. These gates regulate the flow of information into and out of each cell, deciding what to keep in memory, what to discard, and what to pass on to the next time step. This mechanism allows LSTMs to maintain a longer memory and is particularly useful for applications in time series analysis, natural language processing, and more complex sequence prediction tasks where the context from far back in the sequence is essential for making accurate predictions.
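The three gates can be sketched as a single time step of a toy LSTM cell. To keep it readable, this version has one hidden unit, so every value is a plain number rather than a vector, and the weights are hand-picked rather than learned. The `params` layout and the name `lstm_step` are assumptions made for this illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM cell (all values are scalars).

    `params` maps each gate name to (input weight, recurrent weight, bias).
    """
    def gate(name, activation):
        w_x, w_h, b = params[name]
        return activation(w_x * x + w_h * h_prev + b)

    forget = gate("forget", sigmoid)        # how much old memory to keep
    write = gate("input", sigmoid)          # how much new information to store
    output = gate("output", sigmoid)        # how much memory to expose
    candidate = gate("candidate", math.tanh)  # proposed new memory content

    c = forget * c_prev + write * candidate  # updated cell state (the memory)
    h = output * math.tanh(c)                # hidden state passed to the next step
    return h, c


# Hand-picked weights: the forget gate stays open and the input gate stays shut,
# so the cell should carry its initial memory across the whole sequence.
params = {
    "forget": (0.0, 0.0, 10.0),
    "input": (0.0, 0.0, -10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = 0.0, 1.0
for x in [0.5, -0.3, 0.8]:
    h, c = lstm_step(x, h, c, params)
print(c)  # stays very close to the initial value of 1.0
```

The cell state is updated by a gated sum, with no repeated squashing through an activation function, and that additive path is what lets information survive over many time steps.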

Want to learn more? Read the Long Short-Term Memory paper on arXiv.

Transformers (2017)

The landmark paper “Attention Is All You Need,” published in 2017 by Vaswani et al., introduced the Transformer model, revolutionizing the field of natural language processing (NLP). The core innovation of the Transformer is its reliance solely on attention mechanisms, moving away from the recurrent layers used in previous models like RNNs and LSTMs. Because no step has to wait for the previous one to finish, training parallelizes far better, which drastically reduces computation time and lets the model scale to much larger datasets. The Transformer architecture is based on self-attention: for each position in the input, the model computes a weight for every position (including itself) and uses those weights to decide which parts of the sequence to focus on. This capability makes it exceptionally adept at handling a wide array of NLP tasks, including translation, summarization, and text generation, by capturing complex dependencies and relationships within the text. The Transformer’s design laid the groundwork for subsequent advancements in NLP, including the development of models like BERT, GPT, and others, which have continued to push the boundaries of what’s possible in machine learning and AI.
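Here is a rough sketch of the scaled dot-product attention at the heart of the Transformer, written with plain Python lists. It makes one big simplification: the token vectors are used directly as queries, keys, and values, whereas a real Transformer first passes them through learned projection matrices and runs several attention heads in parallel. The toy vectors are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def scaled_dot_product_attention(queries, keys, values):
    """For each query, return a weighted average of the values.

    The weights come from softmax(query . key / sqrt(d_k)).
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Self-attention: the same toy token vectors act as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence. None of the rows depends on another, so they can all be computed at the same time, which is where the parallelism comes from.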

Generative Pre-Training (June 2018)

The series of Generative Pre-Training (GPT) models developed by OpenAI represents significant advancements in the field of natural language processing and generation. Each iteration of the GPT model has built upon its predecessor, introducing improvements in architecture, training data size, and complexity.

GPT (Generative Pre-Training)

Introduction: Presented in the paper “Improving Language Understanding by Generative Pre-Training” (2018), GPT was the first in the series. It combined unsupervised pre-training with supervised fine-tuning, using a Transformer-based architecture.

Key Features: GPT used a 12-layer Transformer, was trained on the BooksCorpus dataset, and showcased the effectiveness of pre-training on a large corpus of text followed by fine-tuning for specific tasks.

Differences: Compared to its successors, GPT had a relatively smaller model size and was trained on a more limited dataset.

BERT (Oct 2018)

BERT (Bidirectional Encoder Representations from Transformers) is a groundbreaking NLP model introduced by Google in 2018 that fundamentally changed the landscape of language understanding tasks. Unlike previous models that processed text in a linear or unidirectional manner, BERT is designed to analyze text bidirectionally, allowing it to grasp the context of a word based on all of its surroundings (both left and right of the word). This is achieved through a mechanism called “masked language model” (MLM), where some percentage of the input tokens are randomly masked, and the goal is for the model to predict the masked words based on the context provided by the non-masked words. This approach enables BERT to develop a deep understanding of language context and nuance, significantly improving performance on a wide range of tasks, including question answering, sentiment analysis, and language inference. BERT’s architecture has been the foundation for many subsequent models, further pushing the boundaries of what’s possible in NLP.
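The masking step can be sketched in a few lines. This is a simplified version: every selected token is replaced with `[MASK]`, whereas the BERT paper selects about 15% of tokens and replaces only most of them with `[MASK]`, swapping some for random words and leaving some unchanged. The function name and the fixed seed are assumptions for illustration.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Randomly hide tokens and remember the originals as prediction targets.

    Returns (masked_tokens, labels); labels[i] is the original token where
    position i was masked and None everywhere else.
    """
    rng = random.Random(seed)  # seeded so the example is repeatable
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)
            labels.append(token)
        else:
            masked.append(token)
            labels.append(None)
    return masked, labels


sentence = "the quick brown fox jumps over the lazy dog".split()
masked, labels = mask_tokens(sentence, mask_prob=0.3)
print(masked)
print(labels)
```

During training, the model sees `masked` and is scored only on the positions where `labels` is not `None`. It can use words on both sides of each gap, which is what makes the approach bidirectional.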

Dive deeper by reading the paper on BERT.

GPT-2 (Feb 2019)

Enhancements: Released in 2019, GPT-2 expanded on the original model with a significantly larger architecture and dataset. It featured up to 48 Transformer layers and was trained on a dataset of 8 million web pages, called WebText.

Key Features: GPT-2 demonstrated remarkable improvements in text generation, capable of producing coherent and contextually relevant paragraphs of text. It was not initially released in full due to concerns over potential misuse.

Differences: The primary differences from GPT were its larger size, more extensive training data, and improved generation capabilities, making it far more powerful than the original GPT model.

GPT-3 (May 2020)

State-of-the-Art: Launched in 2020, GPT-3 set a new standard with its colossal architecture and training corpus. It features 175 billion parameters, dwarfing its predecessors and most other models in existence at the time.

Key Features: GPT-3’s size allows for an unprecedented level of understanding and generation capabilities, enabling applications from writing and summarization to coding and beyond, often requiring little to no fine-tuning.

Differences: GPT-3’s main distinctions lie in its massive scale and its demonstration of few-shot learning, where the model can perform a task given only a few examples in the prompt, without any updates to its weights, showcasing its versatile understanding of language and context.
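In practice, few-shot learning comes down to how the prompt is written. The sketch below assembles such a prompt as plain text. The `Input:` and `Output:` labels and the function name are arbitrary choices for this illustration, not a format prescribed by OpenAI, and no model is actually called.

```python
def build_few_shot_prompt(task_description, examples, query):
    """Assemble a prompt: a task description, solved examples, then the open query."""
    lines = [task_description, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("house", "maison")],
    "bread",
)
print(prompt)
```

The model is expected to continue the text after the final `Output:`, picking up the pattern from the solved examples alone.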

InstructGPT (Jan 2022)

InstructGPT, introduced by OpenAI, is an evolution of the GPT (Generative Pre-trained Transformer) models, specifically designed to follow instructions more accurately and produce outputs that are more aligned with human values. This model architecture was developed in response to feedback on earlier versions of GPT, which, while powerful, sometimes produced outputs that were unhelpful, biased, or not precisely what the user requested.

Key Features of InstructGPT:

1. Fine-Tuning with Human Feedback: InstructGPT is fine-tuned using a dataset of human feedback, which includes rankings of model outputs according to how well they follow given instructions. This process, known as Reinforcement Learning from Human Feedback (RLHF), significantly improves the model’s ability to understand and execute specific tasks as instructed by users.

2. Incorporation of Preferences and Values: Through the human feedback loop, InstructGPT is trained not just to understand language better but to align its responses with ethical guidelines and user preferences. This makes it more suitable for real-world applications where understanding nuanced human instructions and values is crucial.

3. Versatility and Generalization: Despite being fine-tuned on human preferences, InstructGPT retains the versatility of its predecessor GPT models, capable of performing a wide range of natural language processing tasks. It can generate text, answer questions, summarize content, and more, with a greater emphasis on adhering to the user’s intent.

4. Iterative Improvement: The training process allows for continuous updates and refinements based on ongoing human feedback, ensuring that the model remains relevant and aligned with evolving human values and societal norms.
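The rankings mentioned in the first point are typically turned into a training signal through a pairwise loss on a reward model. The sketch below shows that loss in isolation: a human ranking is split into (preferred, rejected) pairs, and the loss is small when the reward model scores the preferred output higher. The reward scores here are made-up numbers. In the real pipeline they come from a neural network, and a separate reinforcement learning step then optimizes the language model against that reward.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_outputs):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    return list(combinations(ranked_outputs, 2))


def pairwise_ranking_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_preferred - r_rejected)), written as log(1 + exp(-margin))."""
    margin = reward_preferred - reward_rejected
    return math.log1p(math.exp(-margin))


# Hypothetical reward scores for three candidate answers, ranked best to worst.
ranking = ["answer_a", "answer_b", "answer_c"]
rewards = {"answer_a": 2.0, "answer_b": 0.5, "answer_c": -1.0}

for preferred, rejected in ranking_to_pairs(ranking):
    loss = pairwise_ranking_loss(rewards[preferred], rewards[rejected])
    print(preferred, ">", rejected, "loss:", round(loss, 3))
```

Minimizing this loss pushes the reward model to give higher scores to outputs that humans ranked higher.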

InstructGPT represents a step towards creating AI models that are not only powerful in processing and generating language but also in understanding and executing tasks in a way that better meets human expectations and ethical standards. This approach aims to make AI interactions more intuitive, helpful, and safe for users.

In summary, the development of ChatGPT is a testament to the remarkable progress made in the field of artificial intelligence, particularly in the area of natural language processing. The journey from Bag of Words to ChatGPT involved a series of groundbreaking innovations, each building upon the previous one. Word Embeddings allowed for the representation of words in a meaningful way, while LSTMs and Transformers revolutionized the way sequential data was processed. BERT’s bidirectional processing and GPT’s generative capabilities further enhanced the models’ understanding and generation of natural language. Finally, InstructGPT’s focus on following instructions and aligning with human values represents a significant step towards more ethical and user-centric AI systems. The culmination of these advancements has resulted in ChatGPT, an AI model that can engage in coherent conversations, generate human-like text, and assist users with a wide range of language-based tasks. As research continues to push the boundaries of NLP, we can anticipate even more remarkable developments in the future, further blurring the lines between human and machine communication.

*This article was created with the help of ChatGPT and DALL-E.